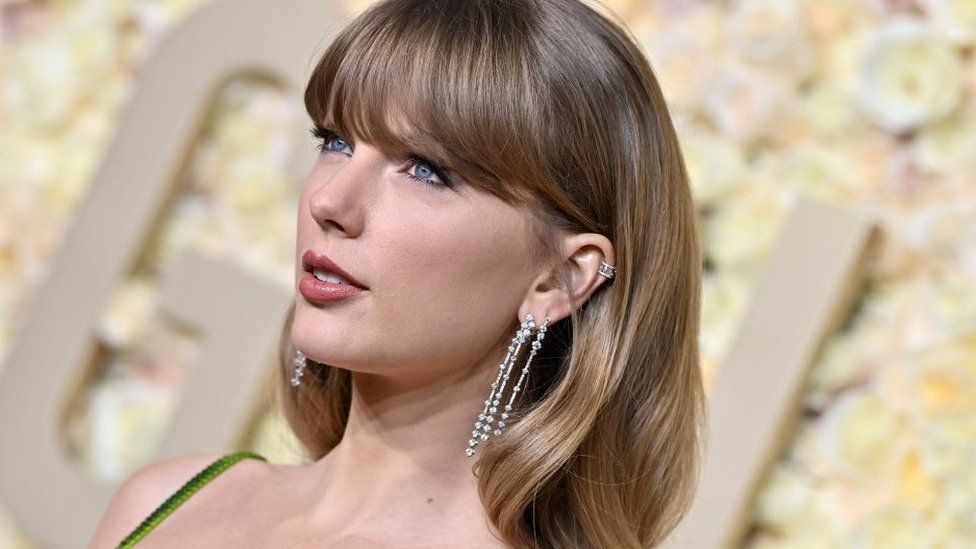

Social media platform X has temporarily blocked searches for Taylor Swift after explicit AI-generated images of the singer spread on the site. X's head of business operations, Joe Benarroch, said the action was taken out of an abundance of caution, as the company prioritizes safety. When users search for Swift on the site, a message appears saying that something went wrong and asking them to try reloading.

The fake graphic images went viral and were viewed by millions, drawing concern from both US officials and fans of the singer. Fans responded by flagging posts and accounts sharing the images and by flooding the platform with real images and videos of Swift to protect her.

X, formerly known as Twitter, issued a statement on Friday condemning the posting of non-consensual nudity, which is strictly prohibited on the platform. Its teams are actively removing the identified images and taking appropriate action against the accounts responsible. It remains unclear when X began blocking searches for Swift, or whether it has taken similar action for other public figures in the past.

The issue also drew the attention of the White House, which said it was alarmed by the spread of the AI-generated images and highlighted their harmful impact on women and girls. White House press secretary Karine Jean-Pierre called for legislation to address the misuse of AI technology on social media and stressed that platforms have a responsibility to ban such content.

US politicians are also pushing for new laws to criminalize the creation of deepfakes, which use AI to manipulate someone's face or body in images or videos. A 2023 study found a 550% increase in the creation of doctored images since 2019, driven by advances in AI technology.
While there are currently no federal laws against the sharing or creation of deepfakes in the US, some states are taking steps to address this issue. In the UK, the sharing of deepfake pornography was made illegal through the Online Safety Act in 2023.