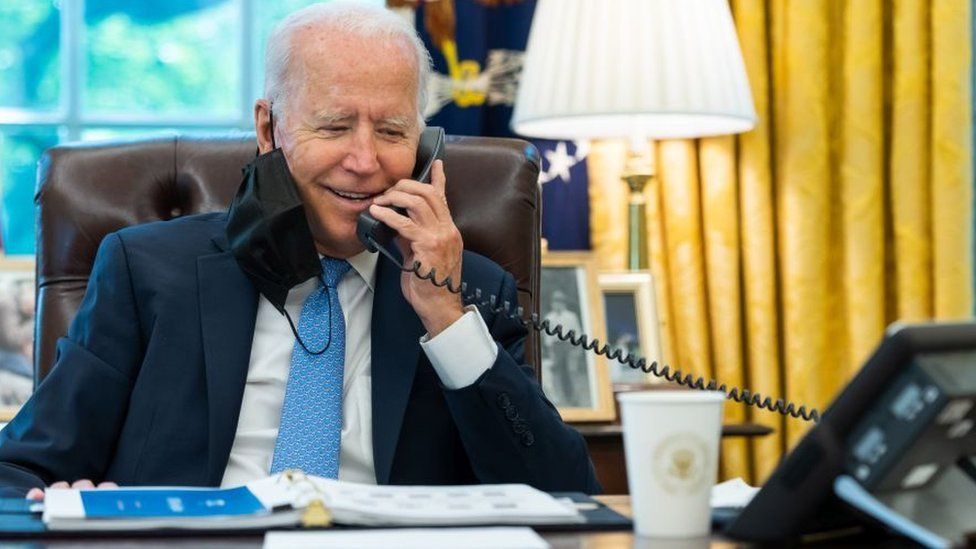

A recent incident involving a recorded message urging voters to save their vote for the November election has raised concerns about AI-powered audio fakery. The voice in the message sounded like President Joe Biden but was in fact a convincing AI clone.

The growing power of this technology was demonstrated to me by the cybersecurity company Secureworks. It showed me an AI system that phoned me and imitated a representative of the company, including their voice. Pauses and inconsistencies during the call made it clear that I was not talking to a real person. Even so, the ability of AI to generate realistic fake voices is a worrying development.

The technology Secureworks used was a freely available commercial platform that can make millions of phone calls per day using AI agents that sound human. The platform is marketed for use in call centers and surveys. A second demonstration showed that AI can create credible copies of voices from small snippets of audio pulled from YouTube.

From a security standpoint, the ability to rapidly deploy thousands of conversational AI systems is concerning, and voice cloning in particular is seen as a significant threat. With major elections taking place this year in countries including the UK, US, and India, there are fears that audio deepfakes (sophisticated fake voices created by AI) could be used to spread misinformation and manipulate democratic outcomes. Politicians in several countries have already been targeted by audio deepfakes, and the UK's National Cyber Security Centre has warned about the risks they pose to the next election. Audio deepfakes are also hard to verify: by the time one is exposed, it has often already circulated widely.

With so many elections due this year, there are calls for social media platforms to step up their efforts against disinformation, and for developers of voice-cloning technology to consider potential misuse before releasing their tools. The UK's election watchdog, the Electoral Commission, has expressed concern about the impact of emerging AI technologies on the trustworthiness of information during elections, and it has worked with other watchdogs to better understand the opportunities and challenges that AI presents. While these concerns are valid, experts also warn against excessive cynicism, which can lead people to doubt reliable information. The challenge is to raise awareness of the dangers of AI without eroding public trust in legitimate sources of information.